How AI Can Help Us Understand the World—Or Radically Misinterpret It

For the first time, neural networks are imagining things they’ve never seen before.

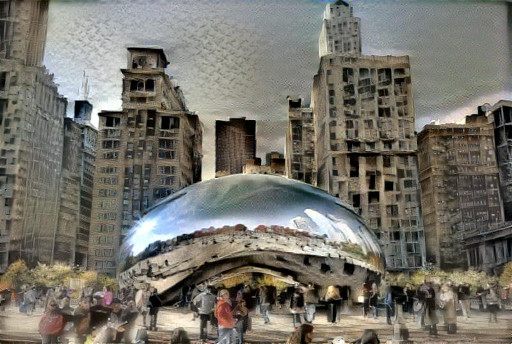

Already, Americans are learning not to believe what they see on TV—but new developments in artificial intelligence could give them even more cause for concern. At the moment, digitally manipulating an image is very possible, but time-consuming, expensive and often quite ineffective. Brand new AI systems, however, can generate “fake” videos near-instantaneously, using advanced neural networks that “translate” ordinary circumstances into extraordinary ones. A street freckled with house-cats is suddenly beset by cougars. A Hawaiian street scene is covered in snow. Your face is on somebody else’s body, doing things you might never do, in places you’ve never been.

This technology is improving at breakneck speed, as neural networks learn to imagine things without ever having seen them.

Nvidia is a billion-dollar technology company based out of futuristic headquarters in palm-bedecked Santa Clara, California. The company is a market leader in AI and machine learning, with soaring stock prices and a finger jammed in almost every self-driving car initiative pie. In October, the company made headlines for its face-generating technology, in which little bits of celebrities are spliced together to make new, convincingly human faces. A nose might remind you of Eva Longoria or a particular set of eyebrows seem somehow familiar, but each face is recognizably unique. The uncanny valley is nowhere to be seen. Similar technology has been used very recently to generate pornography that grafts people’s faces onto different people’s bodies.

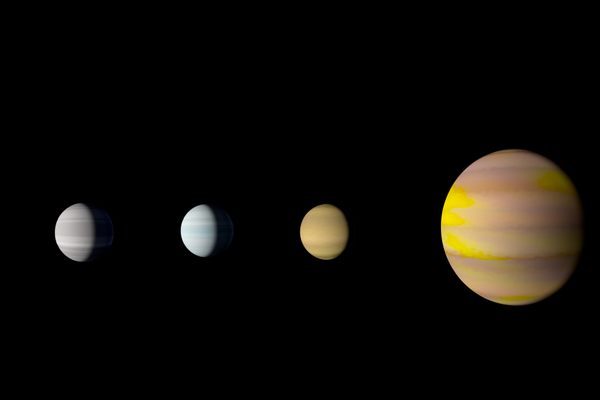

Another brand new framework lets machines imagine sunny streets in different weather conditions, including snow, rain or darkness. Summer street scenes become winter wonderlands; dark nights are immediately illuminated. The same framework can translate one breed of dog into another (a more fun application) or turn house cats into cougars and leopards into jaguars. This image translation uses a kind of algorithm called a generative adversarial network, or GAN. Two neural networks are pitted against each other: one generates an image or a video, using the data available, and the other judges whether the result is convincing. Normally, neural networks that translate images need to have seen an exemplar ahead of time: a pair of images of a street, say, where one is snowy and the other is not. These GANs are some of the first that can, for example, imagine snow on a street even without having seen a snowy version of it before.
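For readers who want to see the generator-versus-judge idea in miniature, here is a toy sketch in Python. It is not Nvidia's framework and it works on single numbers rather than images: the "real data" are numbers clustered around 4, the generator is a straight line, and the judge (usually called the discriminator) is a one-parameter-pair logistic function. Every name in it (`train_toy_gan`, `generator`, `discriminator`) is invented for this illustration.

```python
import math
import random


def sigmoid(t):
    # Clamp the input so math.exp never overflows.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


def generator(params, z):
    # Hypothetical toy generator: turns random noise z into a "fake" number.
    a, b = params
    return a * z + b


def discriminator(params, x):
    # Hypothetical toy judge: its estimate of the probability that x is real.
    w, c = params
    return sigmoid(w * x + c)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters
    for _ in range(steps):
        real = rng.gauss(real_mean, 1.0)  # one sample of "real" data
        z = rng.gauss(0.0, 1.0)           # noise fed to the generator
        fake = generator((a, b), z)

        # Judge's turn: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = discriminator((w, c), real)
        d_fake = discriminator((w, c), fake)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator's turn: gradient ascent on log D(fake), i.e. it is
        # rewarded whenever the judge is fooled into calling a fake real.
        d_fake = discriminator((w, c), fake)
        grad_fake = (1.0 - d_fake) * w  # d log D(fake) / d fake
        a += lr * grad_fake * z
        b += lr * grad_fake
    return (a, b), (w, c)


if __name__ == "__main__":
    gen_params, disc_params = train_toy_gan()
    print("generator (a, b):", gen_params)
    print("discriminator (w, c):", disc_params)
```

In a setup like this the generator's offset `b` tends to drift toward the real data's average, because that is where the judge is hardest to fool, although training of this kind often oscillates rather than settling. Real image-generating GANs follow the same back-and-forth but replace both toy functions with deep neural networks.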

There are obvious helpful applications to this exciting technology. Speaking to The Verge, Ming-Yu Liu, one of the researchers behind the network, said that it would be important particularly with regard to self-driving cars. “For example, it rarely rains in California, but we’d like our self-driving cars to operate properly when it rains. We can use our method to translate sunny California driving sequences to rainy ones to train our self-driving cars.” But being able to produce “fake images” like this at such speed, and with so little data, offers up a litany of worrying misapplications, particularly in terms of the news. It’s not hard to imagine how similar technology could be used to downplay climate change or devastation in the wake of a natural disaster like a hurricane or forest fire, or mislead people far away about rescue and clean-up attempts.

Already, technological developments make it possible to remove Donald Trump’s hair in real time or fake a speech by Barack Obama. For now, these are recognizably fake. Machines don’t yet seem able to generate images good enough to fool us altogether. As the technology improves, however, scary consequences quickly spring to mind. The apparent authenticity afforded to us by “citizen journalists” might quickly be clawed back, as pictures and videos show things that never happened or don’t exist. When asked by Atlas Obscura about more nefarious misuses for the technology, and how they might be prevented, Liu declined to comment.

Some scientists in the field are actively trying to use these advances for good. The MIT Media Lab recently unveiled its new project with Unicef, Deep Empathy. This uses a kind of deep learning method called neural style transfer to generate images that impose scenes of devastation on neighborhoods around the world. Suddenly, the chaos and displacement in Syria is on your very block. It’s hoped that the project will help people to conceptualize international conflict for themselves, making news about natural and human disasters comprehensible in a way it may not be at present.

“In addition to the Syria crisis, we also experimented the idea on different types of disasters, such as earthquake and wildfires, and got promising results,” researcher Pinar Yanardag says. The team is currently asking people to vote on which images inspire more empathy, so that future AI will be able to recognize empathetic images. “This algorithm then can be used by charities to help them include pictures to their campaigns that has a better chance to increase donations!” says Iyad Rahwan, another member of the team.

But this technology too is ripe for misuse, team member Manuel Cebrian says, even while its focus is on having a positive impact. Still, there might be another application that comes out of it—fact-checking. “We believe that the techniques we are developing could potentially be used to tell a real photo from an artificially generated one,” he says. “This same technology could be used to filter fabricated images that malignant elements are trying to spread on the Internet.”
